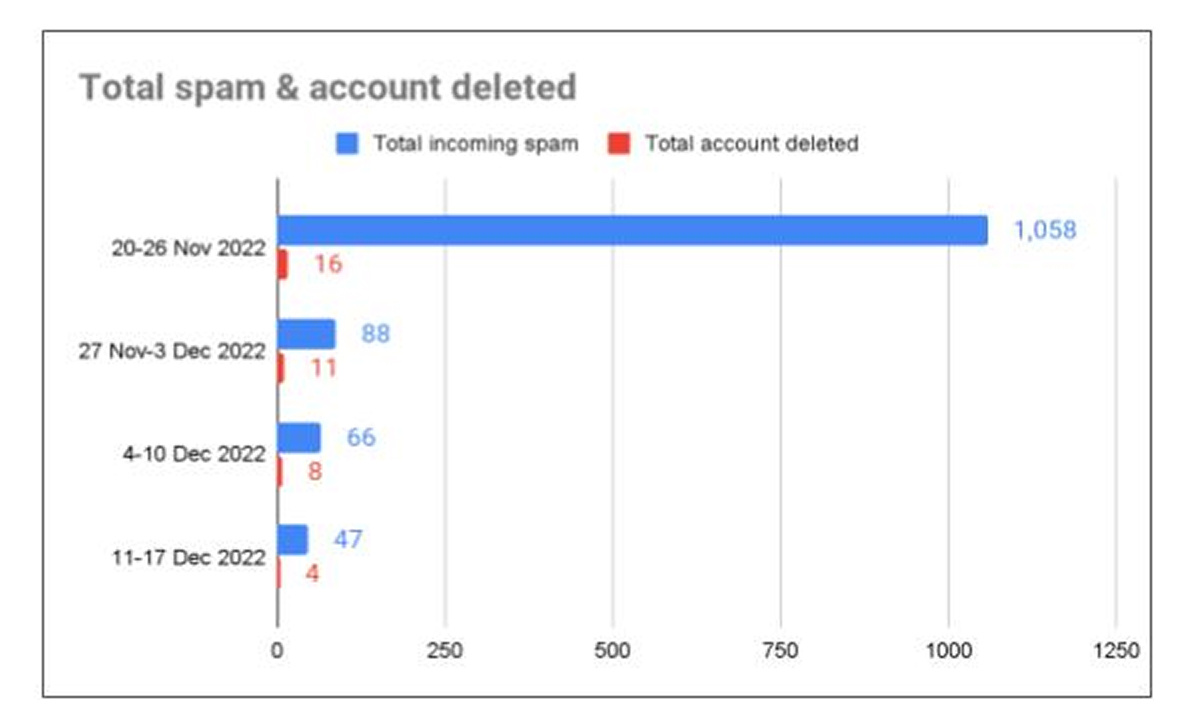

As our jobseeker app gained traction in late 2022, user engagement on the social feed reached an all-time high. However, this rapid growth brought an unexpected challenge.

With increased visibility came a surge in spam and scam activity on the social feed. Malicious actors exploited the platform's reach, flooding the feed with irrelevant and harmful content. As a result, genuine users began to withdraw—hesitant to post or engage—undermining the feed’s core purpose of fostering authentic connections and meaningful community interactions. Addressing this issue was critical to restoring trust and ensuring the long-term health of our social ecosystem.

How might we enable better cross-moderation to reduce irrelevant content on the app?

Lead Designer

One Quarter

(End 2022)

1 product manager

1 developer

1 QA engineer

3 content strategists

Figma

JIRA

Protopie

Amplitude

Survicate

Spearheaded the anti-spam initiative, leading a small cross-disciplinary team to drive execution.

Researched and designed new content moderation strategies tailored to platform needs and user behavior.

Led cross-functional brainstorming sessions with the content team to develop effective moderation strategies.

Conducted user testing with internal teams and end users to evaluate internal moderation tools.

Facilitated usability testing with end users to validate proposed in-app moderation features.

Conceptualized and designed multiple content moderation tools within a single quarter.

Reduced irrelevant content from 60% to 3% in just one quarter.

Achieved a 95.5% reduction in spam within 4 weeks.

Established a clear moderation roadmap to guide the content team, product manager and engineers.

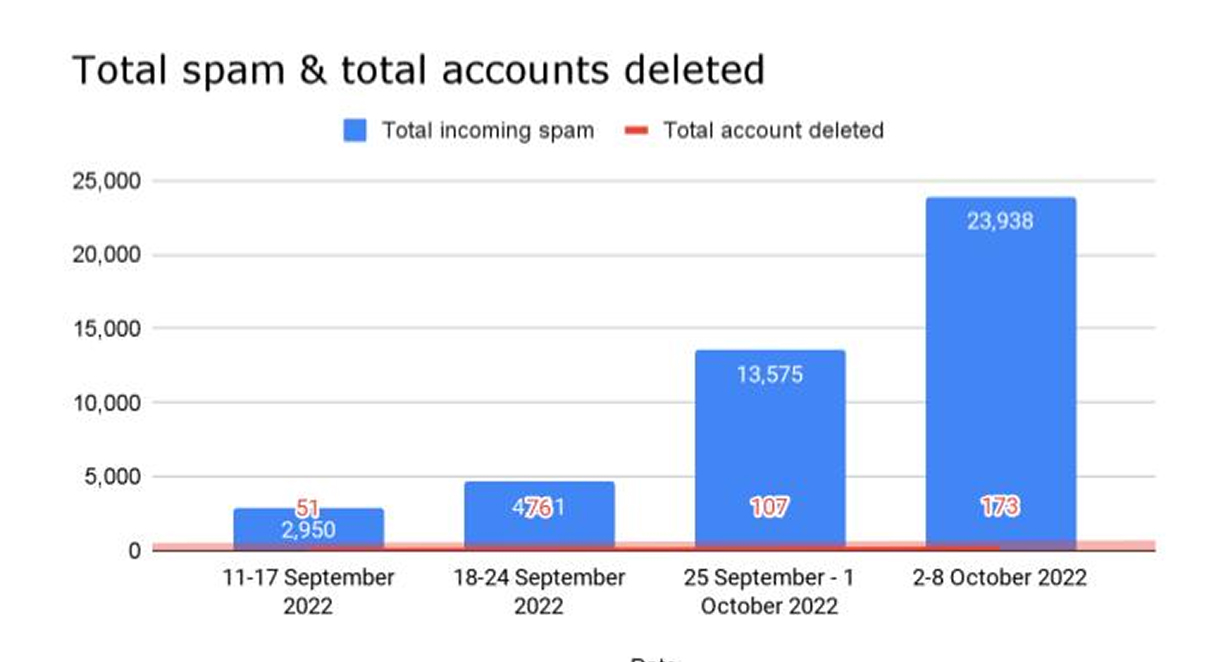

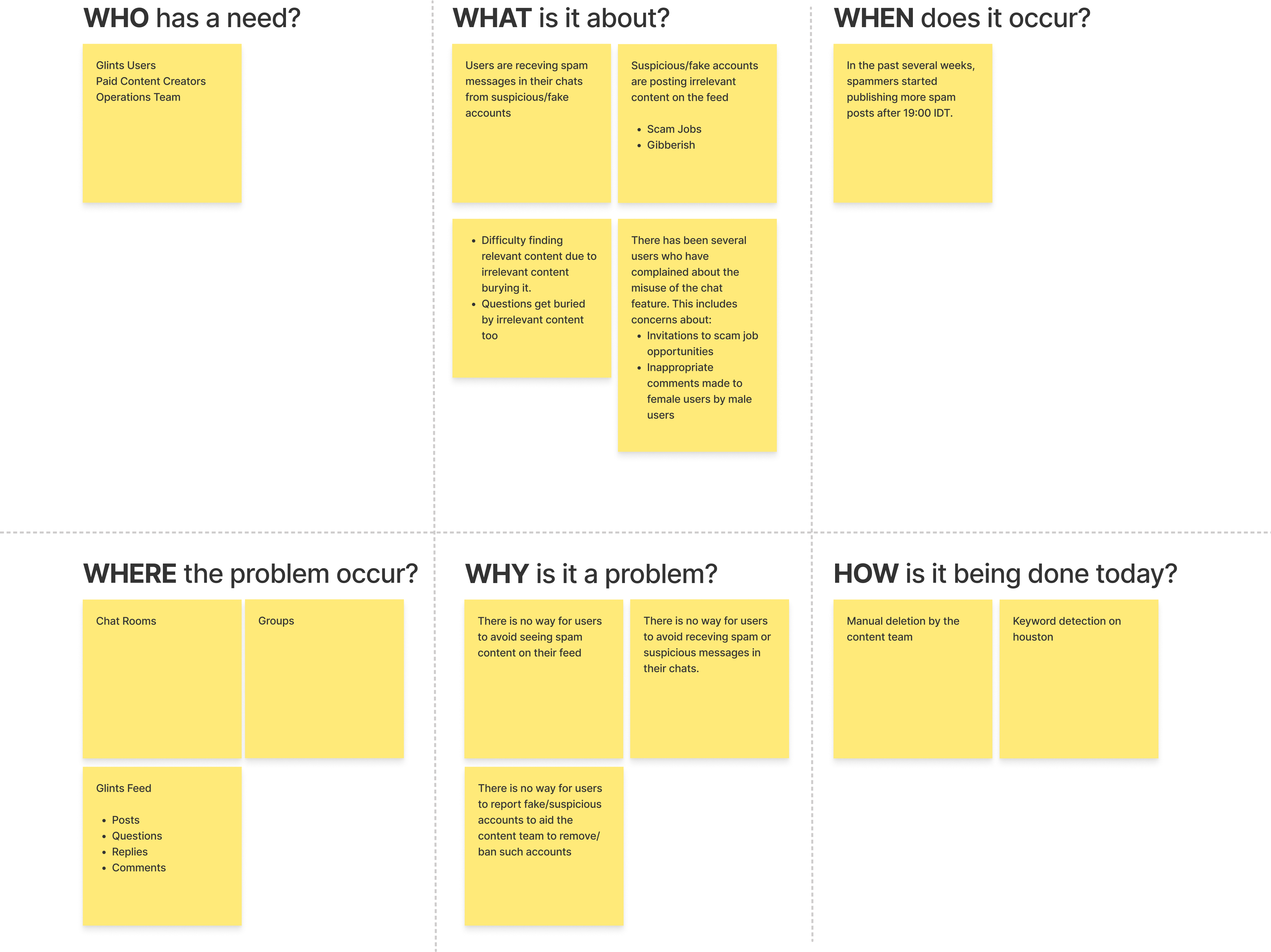

As spam attacks grew, we saw a sharp decline in trust and engagement from genuine users—putting both community health and business goals at risk. Combating spam became our top priority. I led the effort by mapping out where spam occurred across the platform to identify key vulnerabilities. This gave us a clear starting point to prioritize and execute targeted solutions.

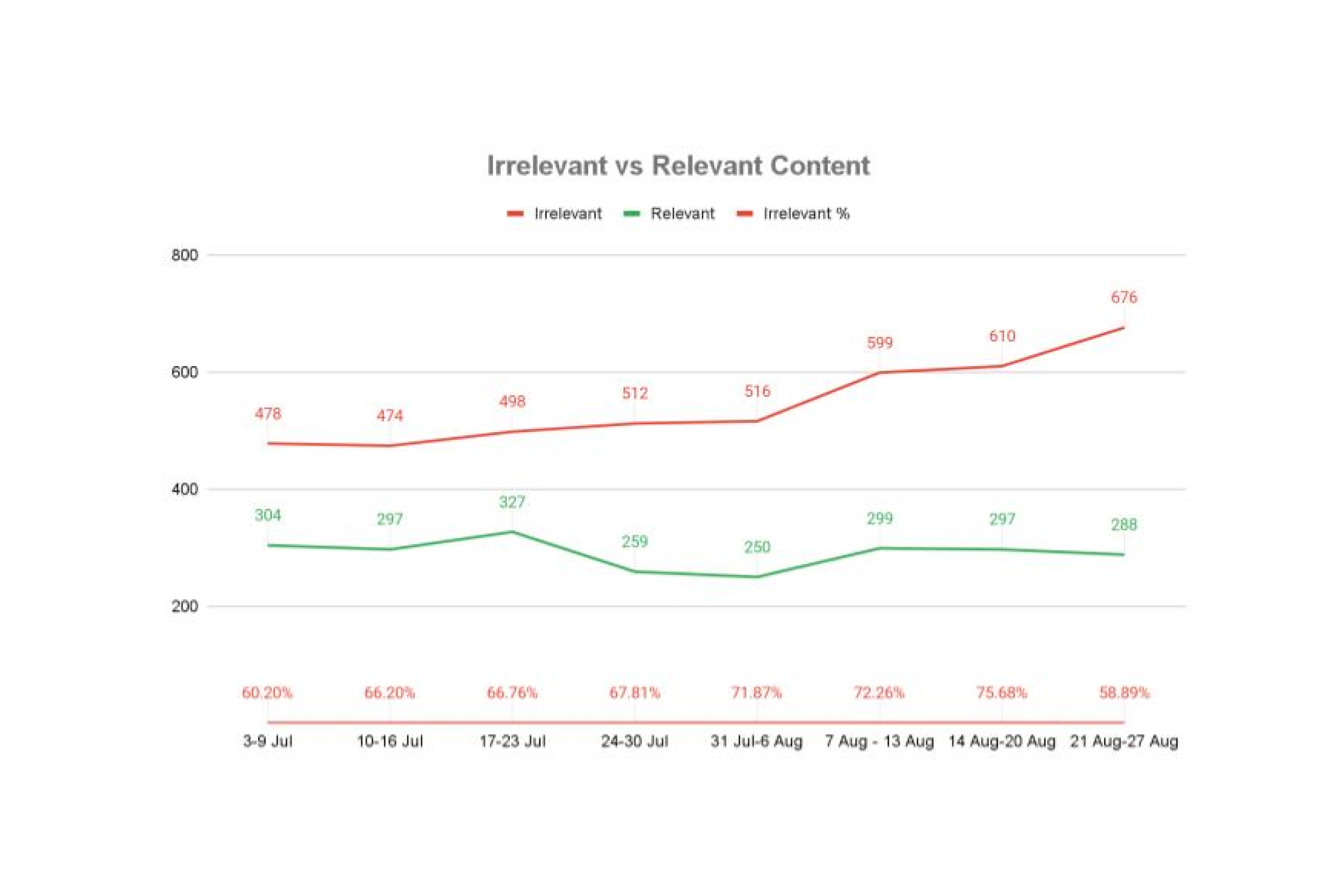

Irrelevant content climbed steadily to over 60% in just three months.

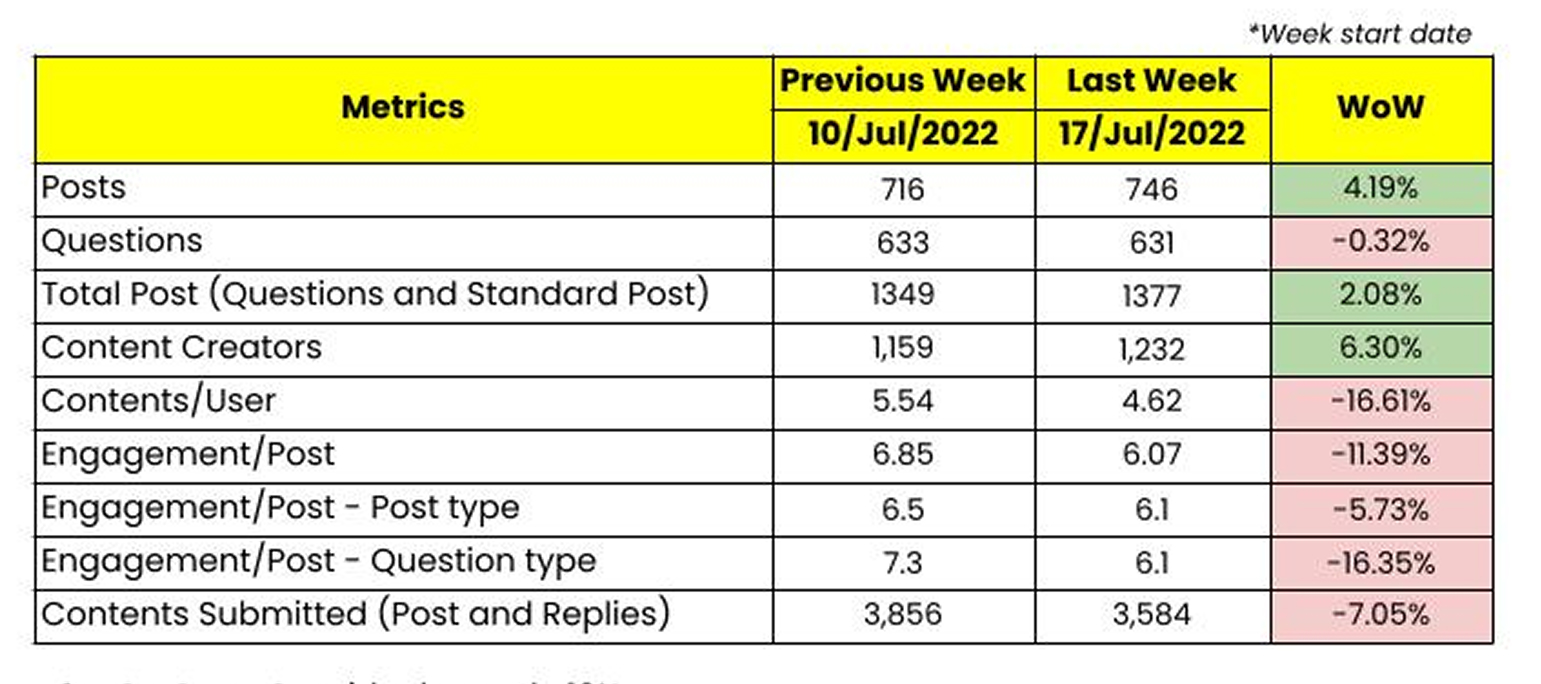

The amount of spam discouraged users from creating content and using the social feed.

The content operations team had to manually delete a growing number of spam posts and accounts.

Fake accounts were being created to post phishing links, share irrelevant content, and send spam through the chat feature.

After reviewing the current metrics and holding initial discussions with the content team, I gained a more holistic view of what was happening and how the content team was handling the situation.

The content team found and deleted irrelevant content manually, which required long working hours.

Spammers learned the content team's working hours and posted spam after hours.

There were no content guidelines for users to refer to.

There was no automated or user-controlled moderation to help the content team manage the volume of spam.

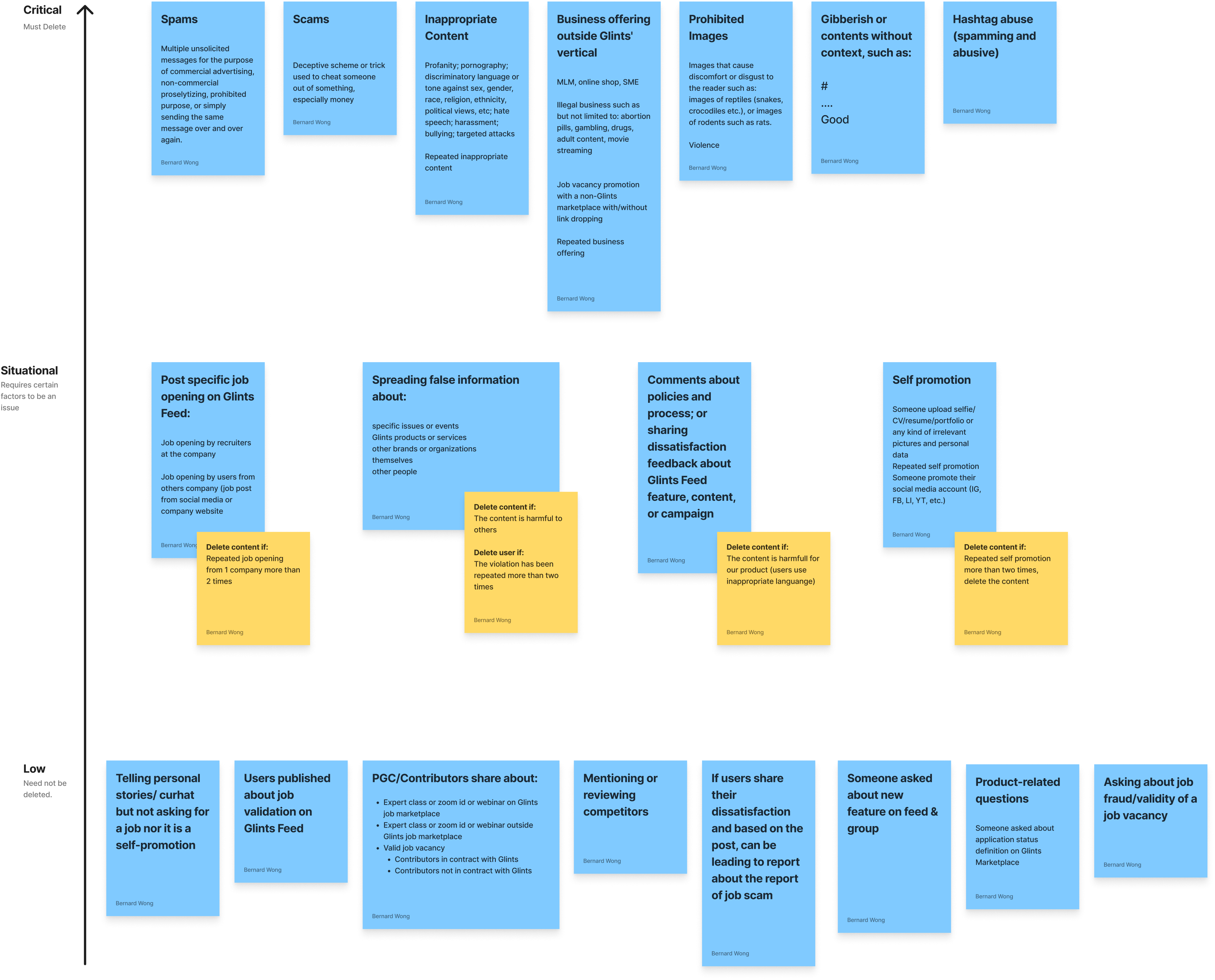

By analyzing data reports and working closely with the content operations team, I identified the most common types of spam and the areas they impacted most.

To streamline response efforts, I created a severity chart to help the team prioritize moderation:

• Immediate Action – Spam requiring urgent removal

• Situational Review – Content needing further context before action

This structured approach improved efficiency while maintaining a fair and balanced user experience.

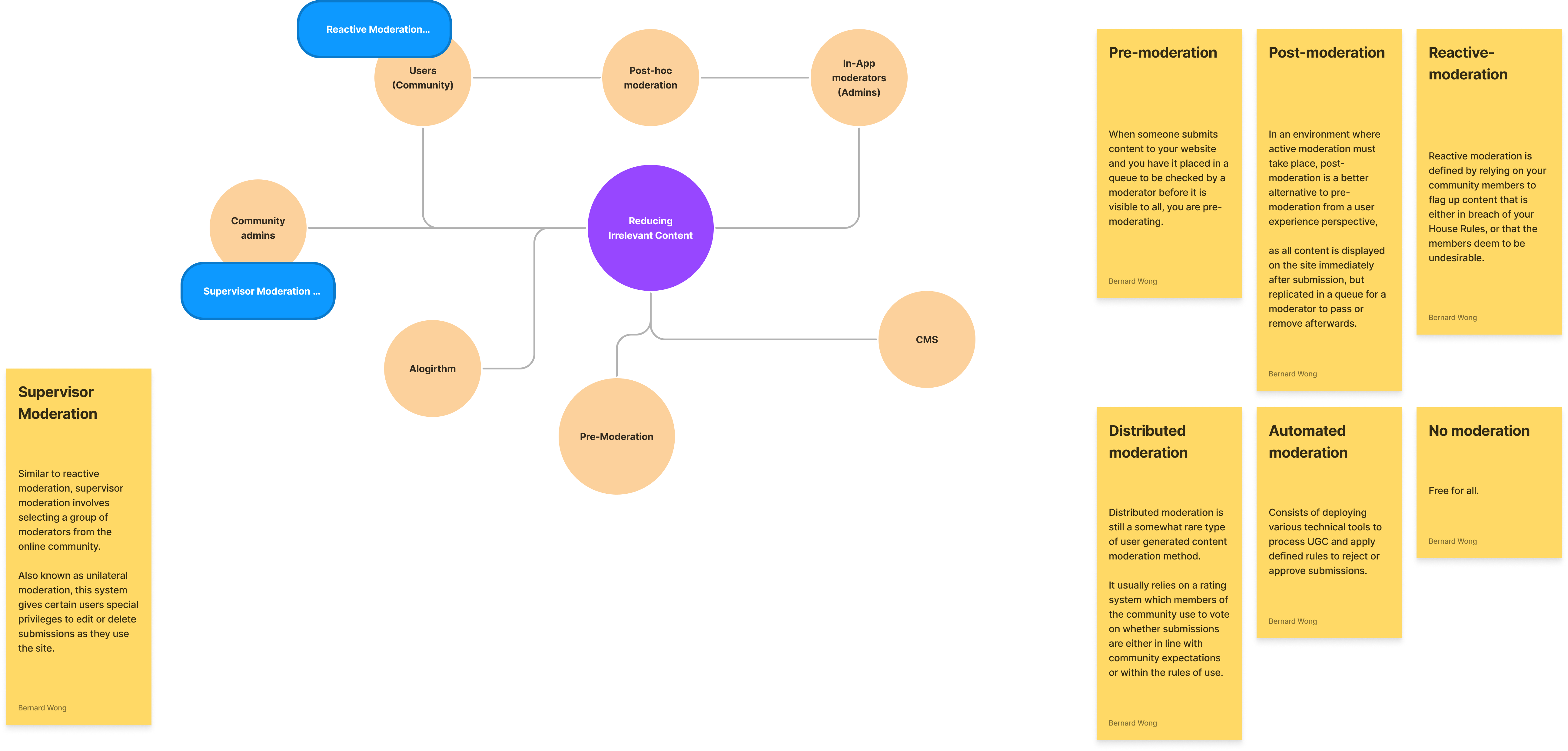

After defining the core problem, I conducted in-depth competitor analysis and research to generate actionable ideas. From this, I established key moderation pillars—prioritized by impact and implementation effort. These pillars served as the foundation of our strategy and were shared with all stakeholders to ensure alignment across teams moving forward.

To understand how other platforms tackled content moderation, I began by identifying the top social content apps used by our target users in Indonesia: Facebook, Twitter (X), and Instagram. I analyzed each platform’s moderation features, highlighting actionable insights we could adapt and integrate into our app to better suit our users’ needs and context.


With a clear understanding of available strategies, I created a service blueprint to map anti-spam measures across the user journey and platform touchpoints. This ensured seamless integration without compromising user experience.

The blueprint also clarified ownership by outlining the product team's responsibilities for each feature. Alignment sessions with management secured buy-in and paved the way for efficient execution.

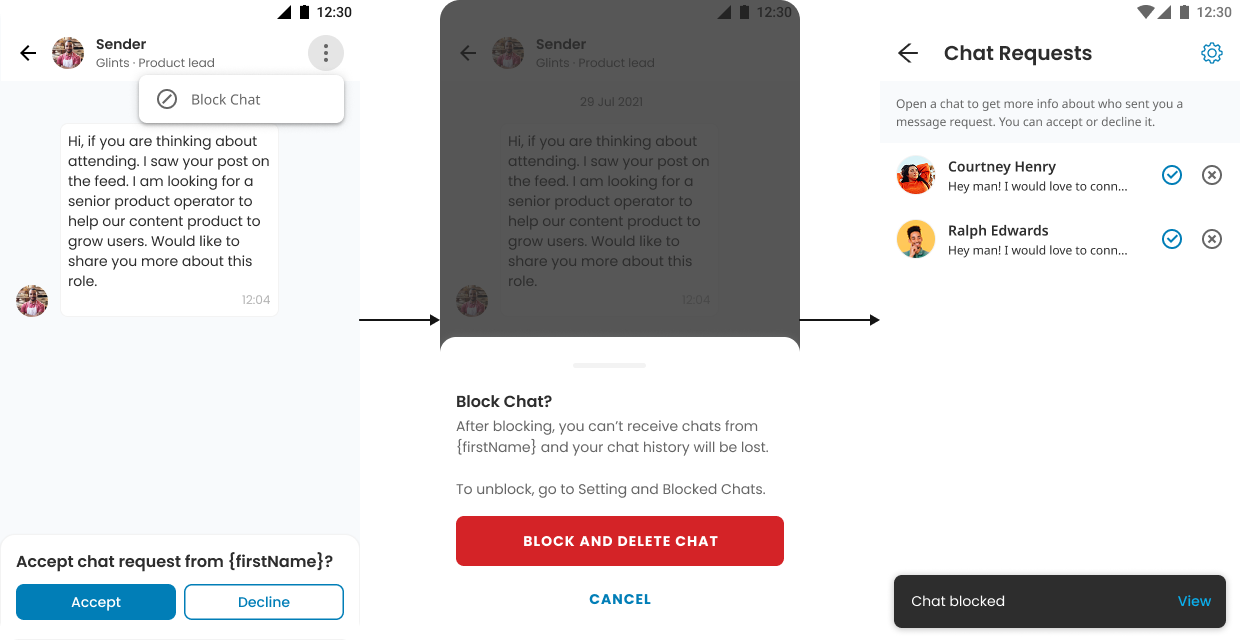

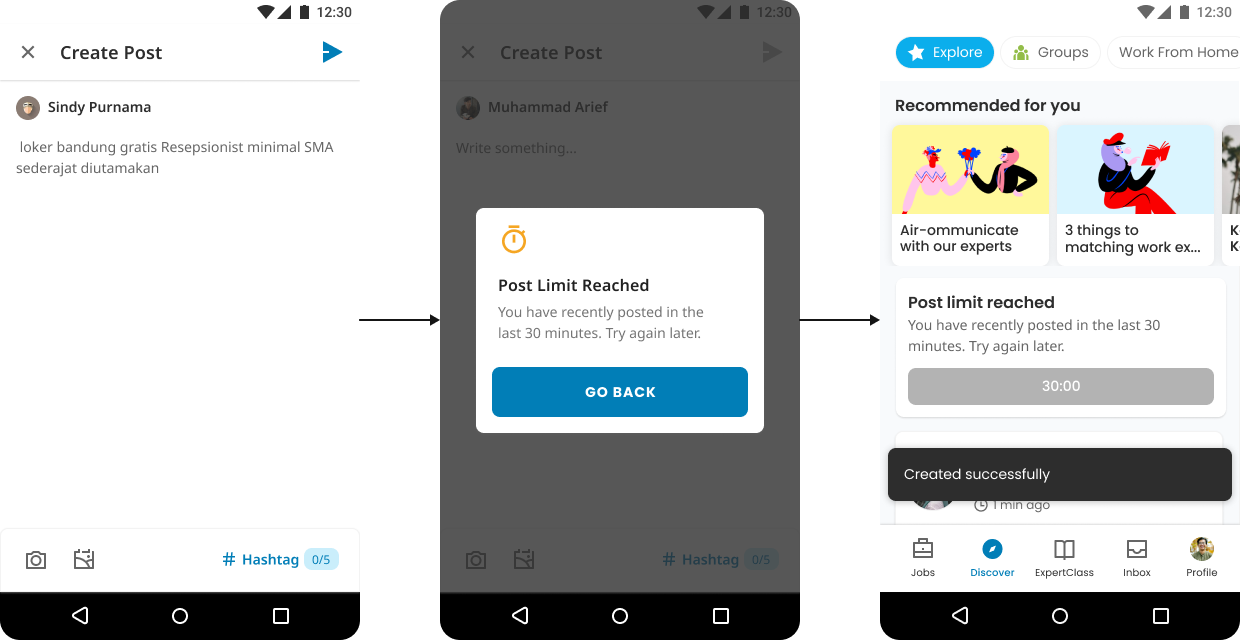

Posts or messages containing blacklisted keywords are automatically flagged and sent for review by the content operations team, ensuring a proactive approach to maintaining a safe and spam-free environment.
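To make the flag-then-review flow concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the app's actual implementation: the keyword list, the case-insensitive whole-phrase matching, and the `ReviewQueue` structure are all hypothetical. The point it shows is that matching content is queued for the content operations team rather than deleted automatically.

```python
import re
from dataclasses import dataclass, field

# Hypothetical example terms; the real blacklist was curated by the
# content team and is not reproduced in the case study.
BLACKLIST = {"free money", "click here", "guaranteed job"}


def build_pattern(keywords):
    # Case-insensitive, whole-phrase matching: "CLICK HERE!" matches
    # "click here", but "free moneybag" does not match "free money".
    escaped = sorted((re.escape(k) for k in keywords), key=len, reverse=True)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


@dataclass
class ReviewQueue:
    """Hypothetical stand-in for the content operations review queue."""
    items: list = field(default_factory=list)

    def submit(self, content_id, text, matches):
        self.items.append({"id": content_id, "text": text, "matches": matches})


PATTERN = build_pattern(BLACKLIST)


def screen(content_id, text, queue):
    """Flag content containing blacklisted keywords for human review.

    Flagged content is only queued, never deleted here: a person on the
    content operations team makes the final call.
    """
    matches = sorted({m.group(0).lower() for m in PATTERN.finditer(text)})
    if matches:
        queue.submit(content_id, text, matches)
    return matches
```

For example, `screen("p1", "CLICK HERE for a guaranteed job!", queue)` returns `["click here", "guaranteed job"]` and adds one item to the queue, while an ordinary post returns an empty list and is published untouched. Keeping a human in the loop trades some speed for fewer false positives on legitimate job-related posts.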

Post authors were empowered to delete spam comments or replies on their posts, providing them with greater control over maintaining the quality of their content.

Users contacted by spammers could block them and delete their chats, giving them control to safeguard their experience and prevent further unwanted interactions.

User data showed that most people post no more than once every 30 minutes, so we introduced rate limits to prevent spammers from posting in rapid succession. This helped preserve content quality and cut down on spam.
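A rate limit like this can be sketched as a per-user sliding window. The Python below is a minimal illustration, not the production logic: the class name and the `max_posts` and `window_seconds` values are hypothetical, since the case study does not state the exact limit. It only shows how a window anchored on the observed 30-minute posting rhythm can block rapid-fire posting without affecting users who post at a normal pace.

```python
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most `max_posts` posts per user within any `window_seconds` span.

    Hypothetical parameters: the limit actually used in the app is not
    stated in the case study.
    """

    def __init__(self, max_posts, window_seconds):
        self.max_posts = max_posts
        self.window_seconds = window_seconds
        # user_id -> timestamps (in seconds) of that user's accepted posts
        self._history = defaultdict(deque)

    def allow(self, user_id, now):
        history = self._history[user_id]
        # Forget posts that have fallen outside the current window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_posts:
            # Over the limit: reject. Rejected attempts are not recorded,
            # so they do not extend the wait.
            return False
        history.append(now)
        return True
```

With `max_posts=2` and a 1,800-second (30-minute) window, a user who posts at t=0 and t=60 is blocked at t=120 but can post again at t=1800, and other users are never affected. Setting `max_posts` slightly above the typical rate is one way to respect the tension noted later between curbing spam and restricting genuine behavior.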

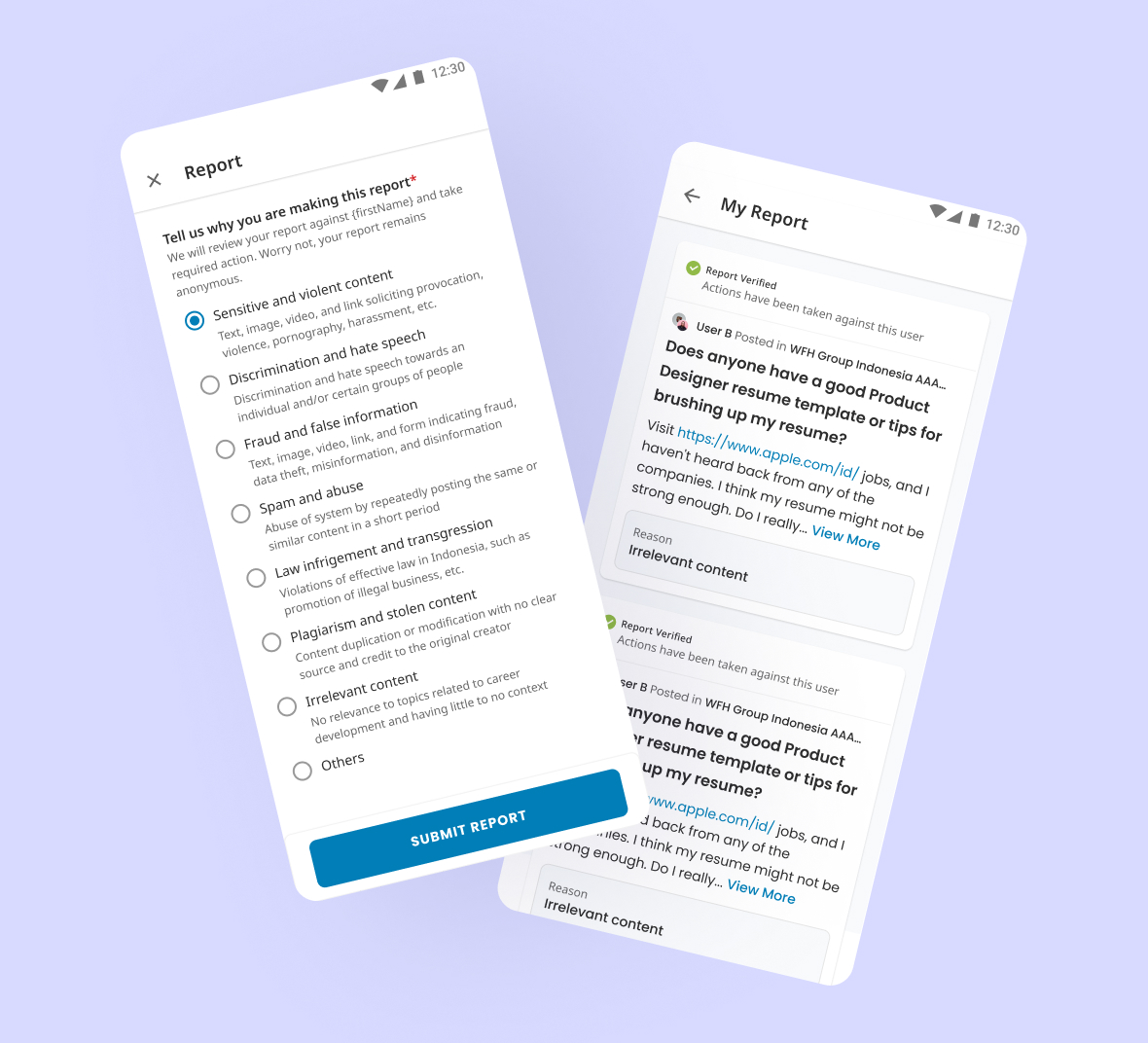

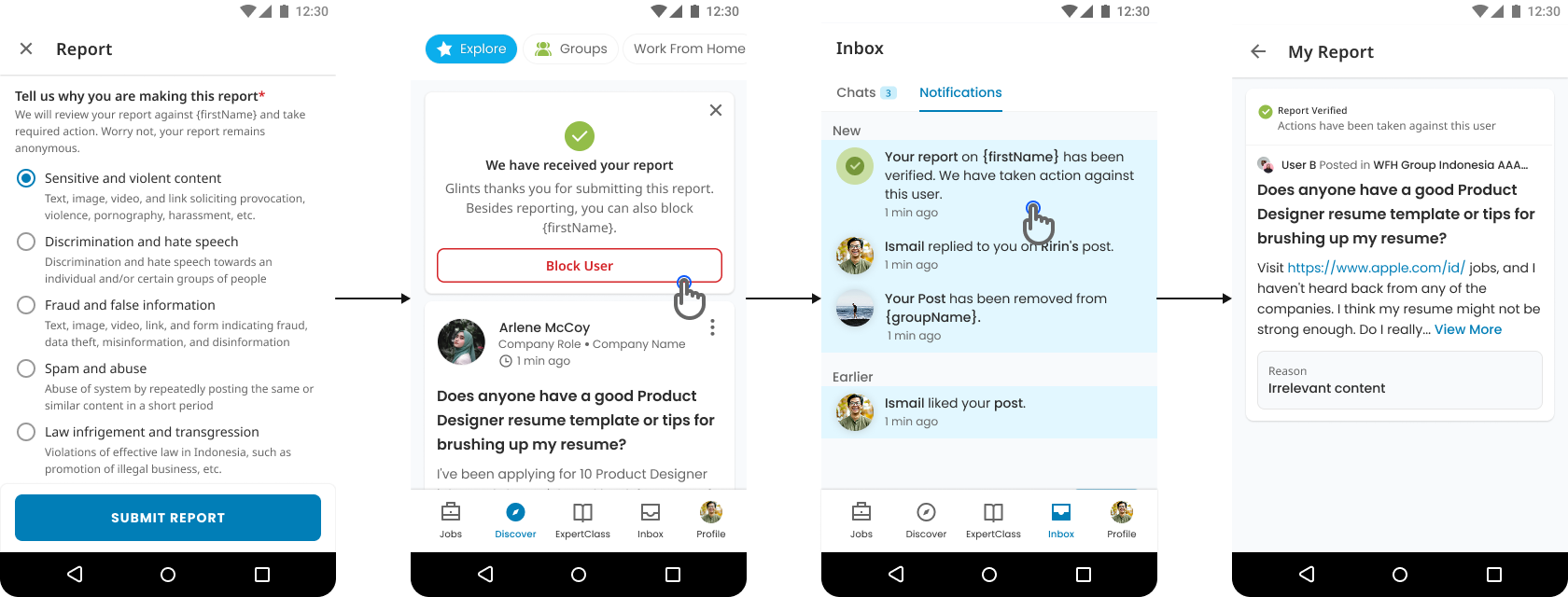

We introduced a reporting feature that let users flag spam, empowering the community to surface inappropriate content.

This reduced the need for manual late-night monitoring and improved the content team’s efficiency.


In hindsight, I would invest more time upfront in building a shared language and success metrics with the content and product teams. While we aligned well later on, having clearer cross-functional definitions of “spam” and “harmful content” from the beginning would have helped us move faster and with more clarity.

Collaboration is key: Partnering closely with content ops and grounding decisions in real user data was critical to building effective moderation tools.

Small changes can create big impact: Features like user reporting and rate limits were simple to implement but significantly improved moderation effectiveness.

Balance matters: Effective moderation isn’t just about removing content—it’s about preserving the user experience while maintaining trust.

Use existing data early: Leveraging user behavior data early on helped us make targeted, informed decisions—saving time and avoiding unnecessary guesswork.

Speed vs. Depth: To act quickly, we prioritized fast-impact solutions over longer-term structural changes like machine learning filters.

Strictness vs. Freedom: Introducing stricter posting limits curbed spam, but we had to be careful not to restrict genuine user behavior.

Operational Load vs. Automation: Some manual processes remained, as full automation would have required more time and technical investment than our timeframe allowed.